If you're a prompt-first developer building agentic applications, you know the pain: you want fast iteration in your IDE, but then comes the infrastructure headache. Vector stores, chunking strategies, compliance scanning, secure retrieval, model selection, cost controls, guardrails, rate limiting, observability… the list never ends.

Most teams waste months (and burn serious budget) stitching together connectors, managing secrets, fighting hallucinations, and praying the backend doesn't break when regulations or data volume change.

With Katara, you get an AI-managed, compliant RAG backend that is purpose-built for developers who want to stay in flow, not become accidental DevOps engineers.

And the fastest path to production-grade retrieval? One-click MCP setup.

Just want the docs? Go Here

1. Open ChatGPT on the web (the install will not work in the desktop app)

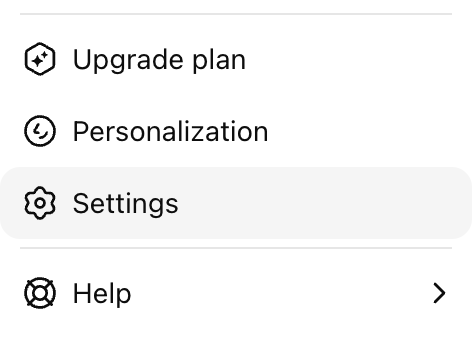

2. Go to Settings

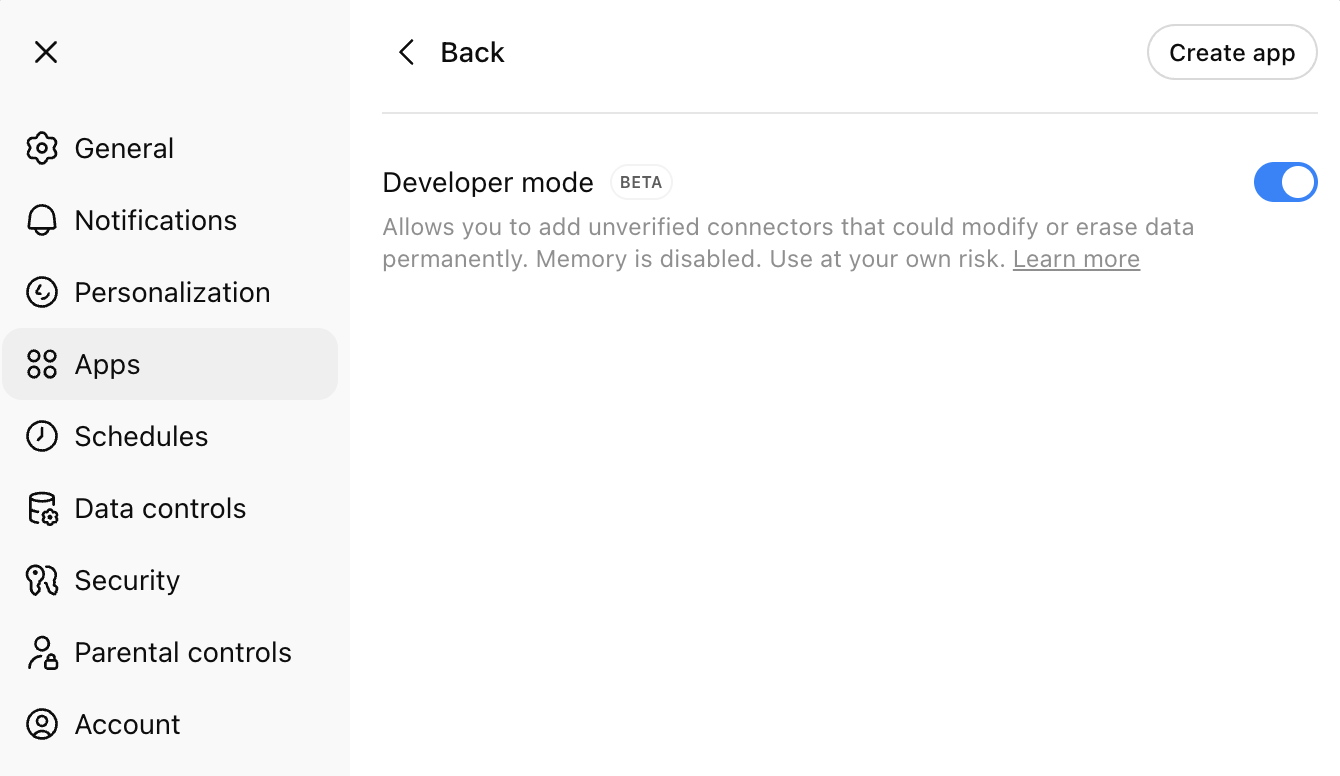

3. Make sure developer mode is enabled. Navigate to Apps > Advanced

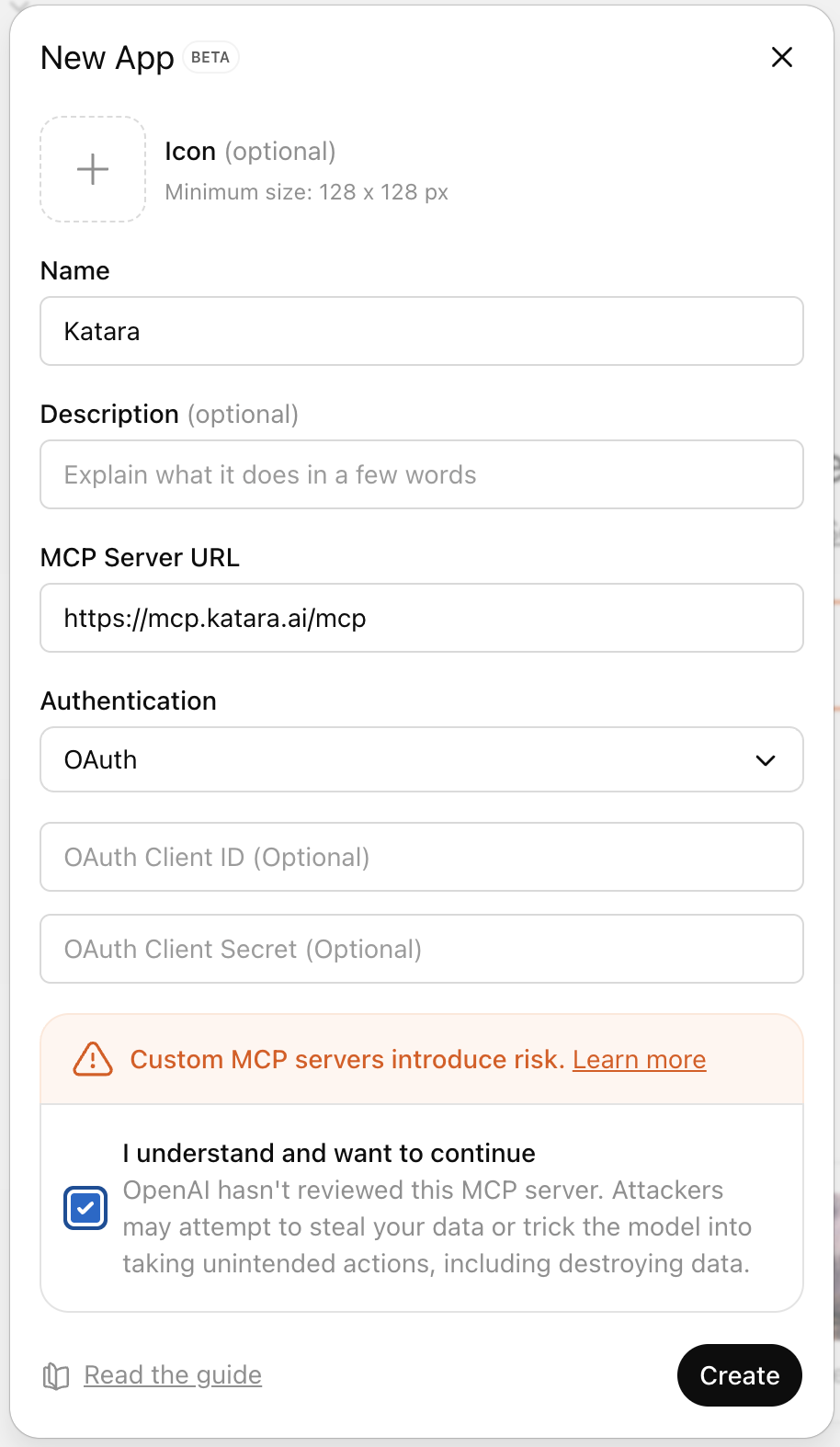

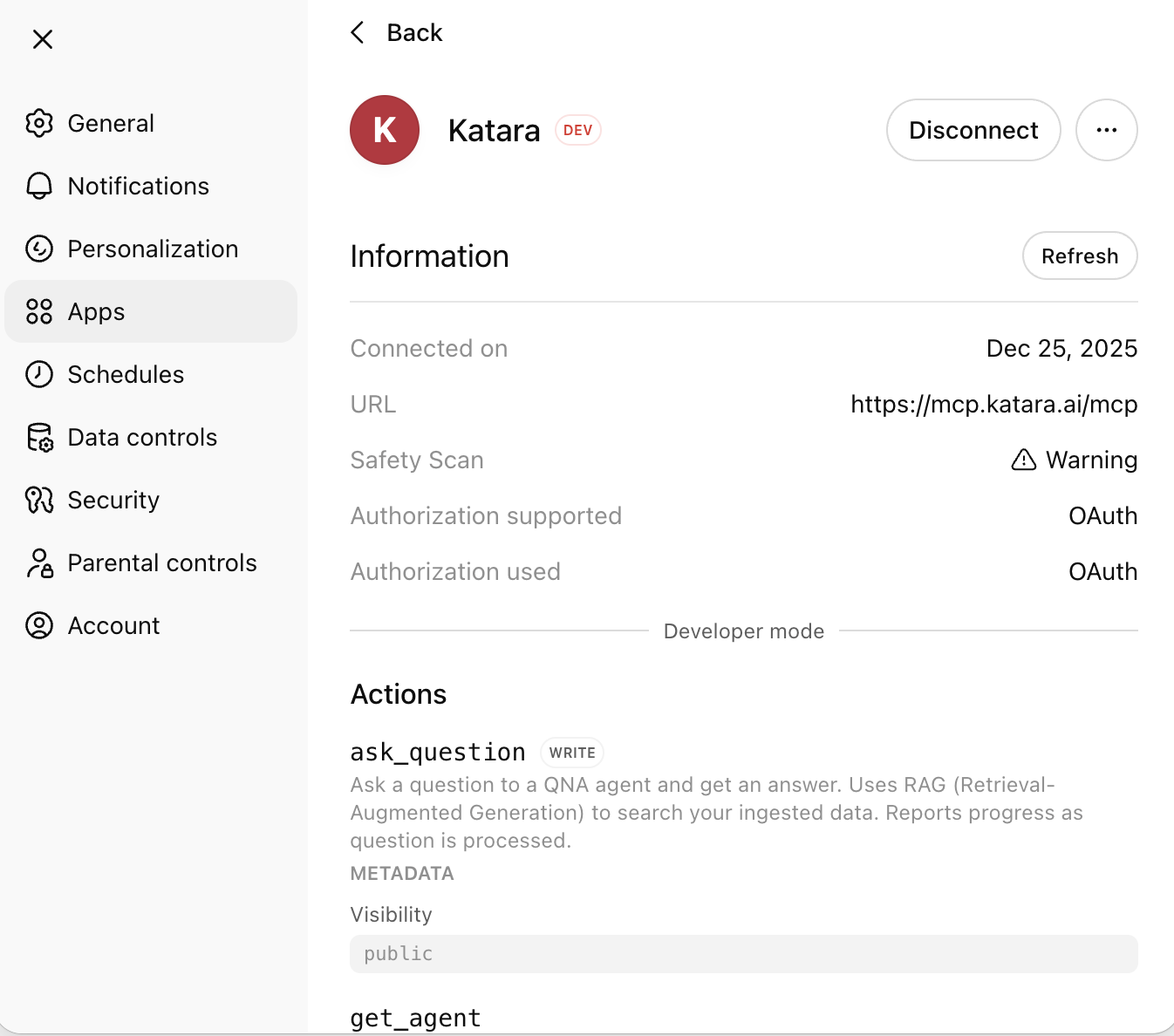

4. Click Create App

5. Enter a name (e.g. 'Katara')

Enter the MCP server URL:

https://mcp.katara.ai/mcp

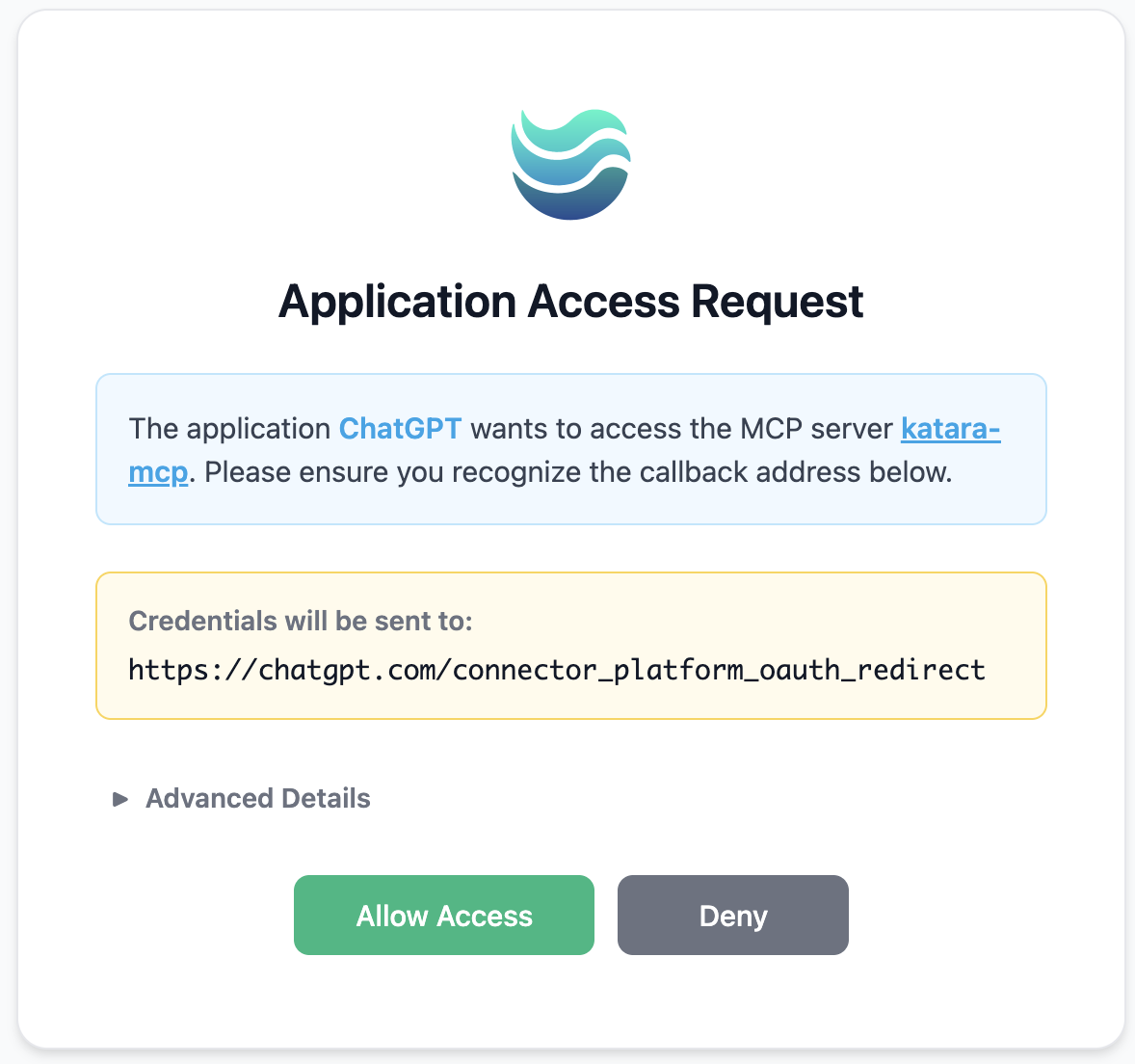

6. Click Create and then Authenticate

7. Your MCP server will be enabled in ChatGPT (you can now use the Katara MCP tool in ChatGPT desktop)

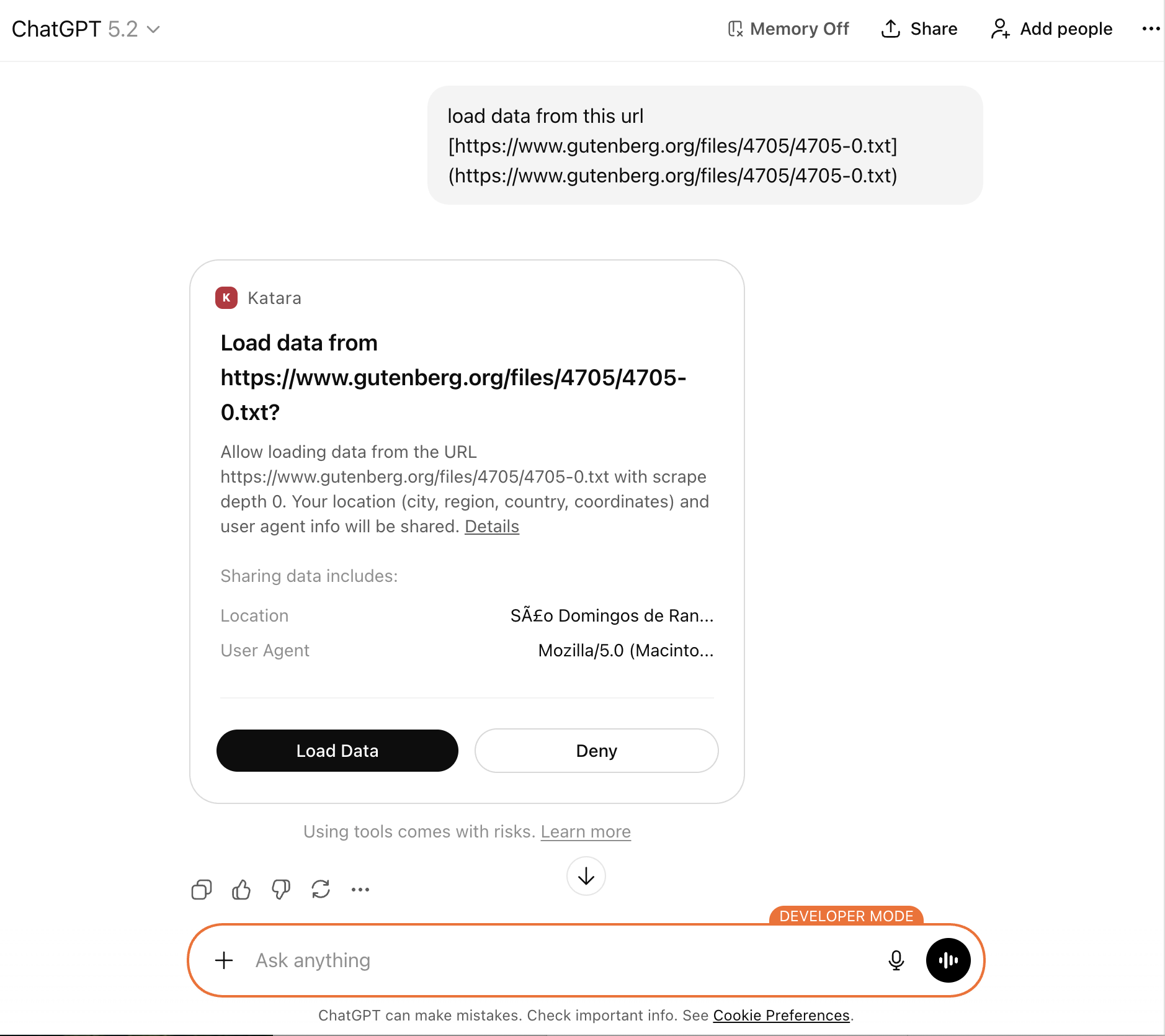

Test it out with some prompts. Here are some examples to try:

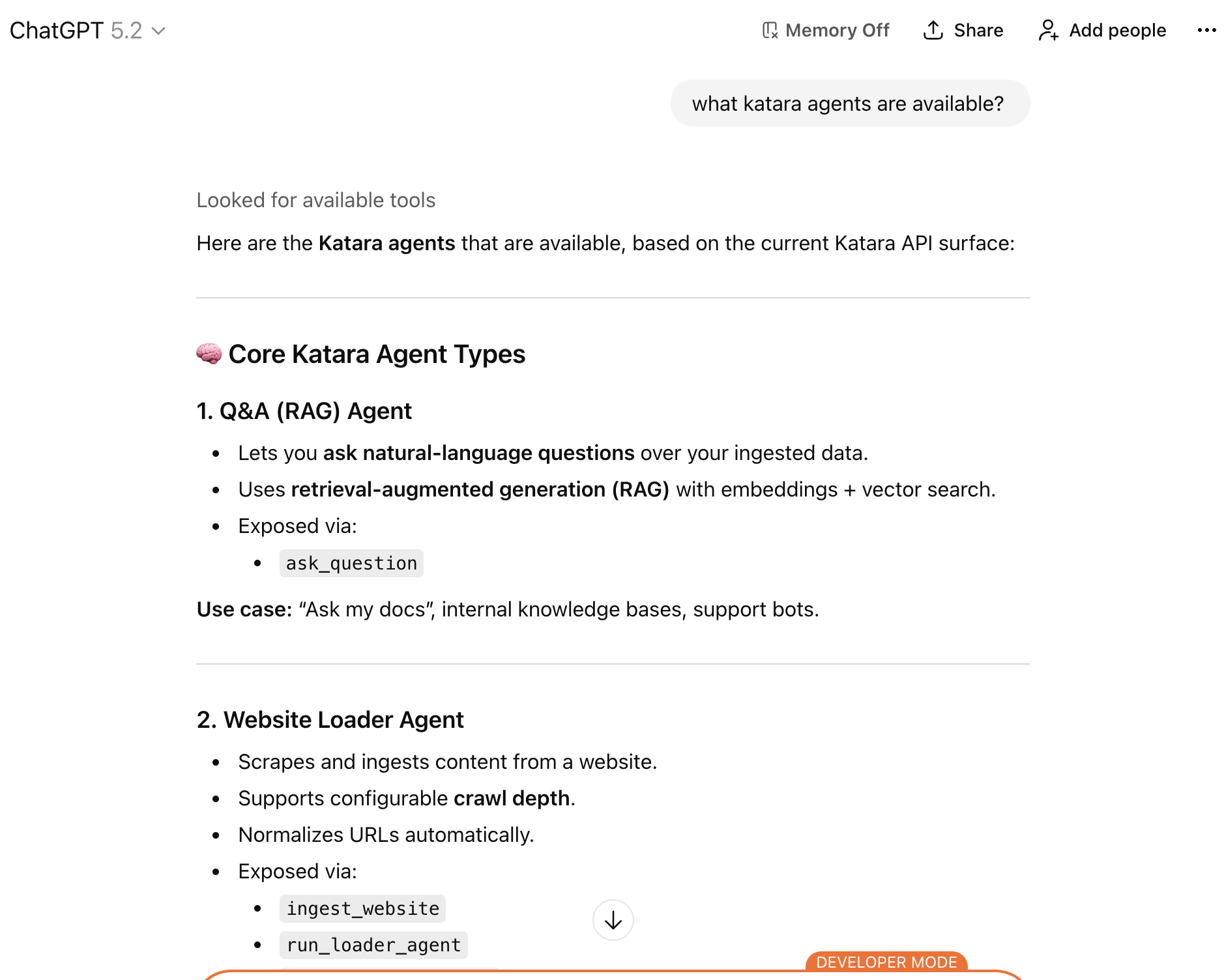

"What Katara Tools Are Availible?

"Tell me about my data in Katara?

"What Katara agents are availible?

"Load data from this url to add it to my katara collection [url]"

MCP is enabled by default once you create an MCP server for a collection; no extra configuration is required for core retrieval functionality. Test it out by trying a chat in Cursor.
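For the curious, here is roughly what a prompt like "What Katara tools are available?" turns into on the wire. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are standard MCP method names. The sketch below only builds the message payloads; it does not contact the Katara server, and the tool name `load_url` and its `url` argument are hypothetical placeholders, not Katara's documented tools.

```python
import json


def jsonrpc_request(method, params=None, request_id=1):
    """Build a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. "load_url" and its "url" argument are
# hypothetical; a real client would use names returned by tools/list.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "load_url", "arguments": {"url": "https://example.com/page"}},
    request_id=2,
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice your MCP client (ChatGPT, in this walkthrough) builds and sends these messages for you, so you never write them by hand.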

MCP turns your processed Katara collections into instant, plug-and-play knowledge bases for any MCP client.

HAPPY BUILDING!

The Model Context Protocol (MCP), introduced by Anthropic in late 2024 and rapidly adopted across the ecosystem (OpenAI, Google DeepMind, Claude Desktop, Zed, Cursor, Replit, Sourcegraph, and many more), is the closest thing we have to USB-C for AI applications.

MCP standardizes how LLMs and agentic tools access external context: files, databases, vector stores, APIs, logs, tools, everything.

Instead of writing (and maintaining) a custom connector every time a new LLM client, IDE plugin, or agent framework appears, you implement one MCP server, and your data and capabilities become available to the entire MCP ecosystem.
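To make the "one server, many clients" idea concrete, here is a toy, in-memory sketch of the server side: a single dispatcher that answers the standard MCP methods `tools/list` and `tools/call`. It is not Katara's implementation and skips transport, authentication, and the `initialize` handshake. The `search_collection` tool and the `DOCS` data are hypothetical and exist only for illustration.

```python
# Hypothetical stand-in for a processed collection.
DOCS = {
    "onboarding.md": "How to onboard a new customer",
    "pricing.md": "Pricing tiers and limits",
}


def search_collection(query):
    """Naive substring search; a real backend would do proper retrieval."""
    matches = [name for name, text in DOCS.items() if query.lower() in text.lower()]
    return ", ".join(matches) or "no matches"


# Tool registry: the name, description, and schema are what clients discover.
TOOLS = {
    "search_collection": {
        "description": "Search the collection for documents matching a query.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
        "handler": search_collection,
    }
}


def error(request_id, code, message):
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(message):
    """Dispatch one JSON-RPC 2.0 request and return the response dict."""
    request_id = message.get("id")
    method = message.get("method")
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        result = {"tools": tools}
    elif method == "tools/call":
        params = message.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(request_id, -32602, "Unknown tool")
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(request_id, -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "search_collection", "arguments": {"query": "pricing"}},
}
print(handle(request))
```

Because every MCP client sends the same message shapes, the same `handle` function would serve an IDE plugin, a chat app, or an agent framework without a per-client connector.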

That's massive future-proofing.

Katara takes this one step further:

No more:

You get one amazing RAG backend that works natively with the entire modern AI toolchain.

If you're tired of RAG plumbing stealing your build time, and you want a backend that actually helps you ship faster and safer, Katara is currently the easiest path to production-grade agentic apps with enterprise-grade safety baked in.